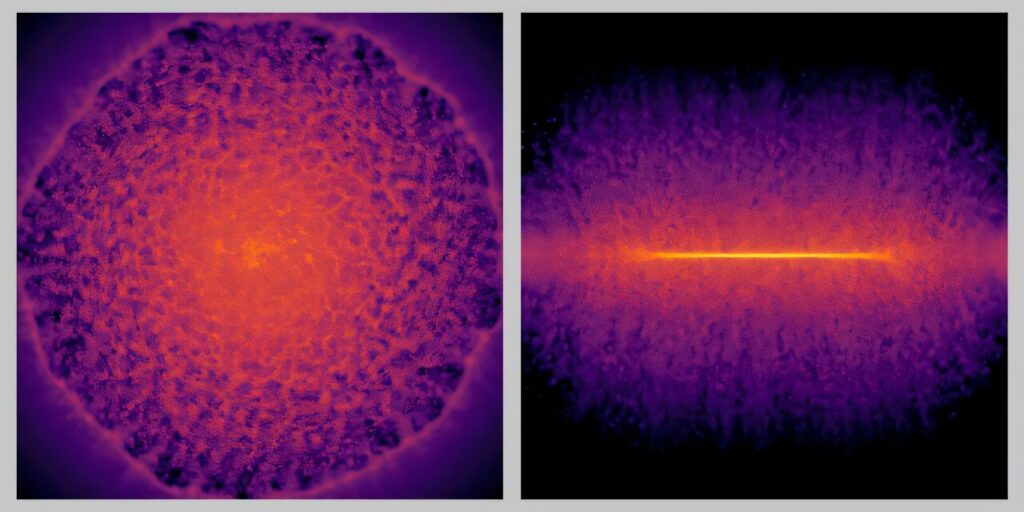

Scientists have achieved something that once seemed nearly impossible: the first computer simulation of the Milky Way that represents more than 100 billion individual stars. The model follows the galaxy’s behavior over a period of 10,000 years and was made possible by combining artificial intelligence with some of the world’s most powerful supercomputers. The research was published in the Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, and it marks a major step forward in both astronomy and computing.

For many years, astrophysicists have wanted to simulate the Milky Way in fine detail, down to the level of individual stars. Such a simulation could help scientists test ideas about how galaxies form, how they change over time, and how stars are born and die. But the Milky Way is incredibly complex. It contains over 100 billion stars, along with clouds of gas and dust, dark matter, and explosive events like supernovae. All of these components interact through forces such as gravity and pressure, on vastly different scales of time and space. Capturing all of this accurately in a computer model had long been out of reach.

Until now, even the best simulations had to take shortcuts. Instead of modeling each star, a single “particle” in the simulation typically represented a group of about 100 stars. This let scientists study a galaxy’s large-scale features, but not what was happening to individual stars. Time was another major problem. To capture fast events like a supernova, a simulation needs very small time steps, which sharply increases the number of steps, and therefore the computing power, needed to cover a given span of time. A conventional simulation detailed enough to resolve individual stars in the Milky Way would take hundreds of hours to calculate just one million years of evolution. Simulating a billion years that way would require more than three decades of nonstop computing.
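A back-of-the-envelope sketch of the two scaling effects described above, offered only as illustration: the number of steps needed to cover a stretch of simulated time is that span divided by the step size, so shrinking the step by some factor multiplies the step count by the same factor; and because a billion years is a thousand million-year stretches, the computing time per million years is multiplied by 1,000. The step sizes (1,000 and 10 years) and the 300-hour figure are hypothetical round numbers, not values from the study.

```python
HOURS_PER_YEAR = 24 * 365.25  # hours in an average calendar year


def steps_needed(span_years: int, dt_years: int) -> int:
    """Fixed-size time steps needed to cover span_years (integer ceiling division)."""
    return -(-span_years // dt_years)


def years_for_gyr(hours_per_myr: float) -> float:
    """Wall-clock years needed for 1 billion simulated years,
    given the wall-clock hours needed per million simulated years."""
    return hours_per_myr * 1_000 / HOURS_PER_YEAR


# Hypothetical step sizes: a coarse step vs. one small enough for fast events.
for dt in (1_000, 10):
    print(f"dt = {dt:>5} yr -> {steps_needed(1_000_000, dt):>7,} steps per million years")

# Hypothetical 300 h per million years, a low end of "hundreds of hours".
print(f"{years_for_gyr(300):.1f} years of computing per billion years")  # 34.2
```

Even the low end of "hundreds of hours" per million years scales to roughly 34 years per billion, consistent with the "more than three decades" estimate.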
Simply adding more processors is not a good solution: supercomputers consume huge amounts of energy, and efficiency drops as more processors are added. To get around this, researcher Keiya Hirashima and colleagues in Japan and Spain developed a smarter approach. They built an AI-based “surrogate model” trained on highly detailed simulations of supernova explosions. The model learned how gas behaves after a star explodes and can quickly predict these effects without running a full physical calculation each time. By letting the AI handle the most demanding small-scale processes, the main simulation can focus on the overall structure and motion of the galaxy.

When tested on the Fugaku and Miyabi supercomputers, the new method proved remarkably fast. It could simulate one million years of galaxy evolution in just 2.78 hours. At that rate, one billion years of evolution could be calculated in around 115 days instead of 36 years.

The breakthrough matters beyond our own galaxy. The same method could help scientists study other complex systems, such as global weather patterns and climate change. By combining AI with high-performance computing, researchers now have a powerful new tool for exploring some of the most complicated processes in nature and uncovering how the universe, and even the conditions for life, came to be.
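The speed figures can be checked with simple arithmetic. A billion years is 1,000 stretches of a million years, so 2.78 hours per million years becomes 2,780 hours, or about 115.8 days, matching the quoted "around 115 days". The sketch below also backs out the per-million-year cost implied by the 36-year figure and the resulting speedup. It assumes a 365.25-day year; the only inputs taken from the article are 2.78 hours and 36 years.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365.25  # assumption: average calendar year
MYR_PER_GYR = 1_000     # one billion years = 1,000 million-year stretches

# Figures quoted in the article.
new_hours_per_myr = 2.78  # AI-assisted run on Fugaku and Miyabi
old_years_per_gyr = 36    # conventional approach

new_days_per_gyr = new_hours_per_myr * MYR_PER_GYR / HOURS_PER_DAY
old_days_per_gyr = old_years_per_gyr * DAYS_PER_YEAR

# Conventional cost per million years implied by the 36-year figure.
old_hours_per_myr = old_days_per_gyr * HOURS_PER_DAY / MYR_PER_GYR
speedup = old_days_per_gyr / new_days_per_gyr

print(f"New method: {new_days_per_gyr:.1f} days per billion years")
print(f"Implied conventional cost: {old_hours_per_myr:.0f} hours per million years")
print(f"Speedup: about {speedup:.0f}x")
```

The implied conventional cost, roughly 316 hours per million years, fits the "hundreds of hours" described earlier, and the overall gain works out to a little over a hundredfold.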

https://knowridge.com/2025/11/scientists-recreate-100-billion-stars-in-a-virtual-milky-way-for-the-first-time/

Scientists recreate 100 billion stars in a virtual Milky Way for the first time